How Many Interviews for Residency Community Family Medicine

Abstract

Background and Objectives: Resident recruitment is one of the most important responsibilities of residency programs. Resource demands are among the principal reasons for calls for recruitment reform. The purpose of this study was to provide a national snapshot of estimated costs of recruitment among US family medicine programs. The aim was to provide information to assist programs in securing and allocating resources to manage the increasingly challenging recruitment process.

Methods: Questions were part of a larger omnibus survey conducted by the Council of Academic Family Medicine (CAFM) Educational Research Alliance (CERA). Specific questions were asked regarding how many interviews each program offered and completed; interview budget; additional funds spent on recruitment; reimbursements; and resident, faculty, and staff hours used per interviewee.

Results: The response rate was 53% (277/522). Program directors estimated that residents devoted 6.4 hours (95% CI 6, 7) to each interview, faculty 5.6 hours (95% CI 5, 6), and staff 4.4 hours (95% CI 4, 5). The average budget for interviewing per program was $17,079 (±$19,474) with an additional $8,274 (±$9,615) spent on recruitment activities. The average amount spent per applicant was $213 (±$360), with $111 (±$237) in additional funds used for recruitment. Programs were more likely to pay for interviewee meals (82%) and lodging (59%) than travel (3%).

Conclusions: As individual programs face increasing pressure to demonstrate value for investment in recruiting, data generated by this national survey enable useful comparison among individual programs and sponsoring organizations. Results may also contribute to national discussions about best practices in resident recruitment and ways to improve efficiency of the process.

Resident recruitment is one of the most important and exhausting responsibilities of graduate medical education (GME) programs. In 2018, 557 family medicine residency programs offered 3,629 positions in the National Resident Matching Program (NRMP).1 A national survey of family medicine program directors (N=152) reported that programs received an average of 1,322 applications, equivalent to 189 per available position.2 On average, family medicine programs invited 113 applicants to interview, completed interviews with 85, and ranked 72 applicants for seven positions.2 The 2018 match was the largest on record both for applicants and positions offered.1 Although residency programs report already being overwhelmed with applications, increasing numbers of applications are predicted due to the synergistic effect of larger numbers of applicants and increased numbers of applications made by each applicant.3

The resource demands of recruitment are among the main reasons for calls for process reform by programs in multiple specialties.3-11 Nonetheless, very little national information is available on the costs to programs of resident recruitment or the resources allocated by hospitals and other program sponsors. We identified only one relevant study, a 2009 survey of residency program directors in internal medicine (N=270). That study estimated a median cost of $14,162 (range $9,741-$22,605) to programs for each NRMP-matched position. The total included an average of $1,042 (range $733-$1,565) per completed interview.12 Community-based programs spent more than university-affiliated programs, and university-based programs reported the lowest expenditures.12

Studies of student-reported expenses during residency interviewing show that programs vary significantly in covering costs and providing reimbursement.13,14 Meals are the most common expenses covered by programs. Students report that programs seldom contribute to accommodation and rarely to travel expenses.13,14 In general, students report that family medicine programs provide more financial and in-kind support to interviewees than other specialties.13,14

The purpose of this study was to provide a national snapshot of estimated costs of resident recruitment among US family medicine residency programs based on program characteristics such as region, size, and type (academic, community-based/university-affiliated, and community-based/nonaffiliated). This study also sought to identify and quantify the main components of recruiting and interviewing expenses, including faculty, resident, and staff time; reimbursement of travel expenses, lodging, and meals; and other costs such as recruitment at national conferences and recruitment materials. Our aim was to provide information to assist programs in securing and allocating resources to manage the increasingly challenging resident recruitment process. Study findings can also inform and stimulate national discussion of best practices in developing a more effective and efficient process of resident recruitment.

Methods

The questions were part of a larger omnibus survey conducted biannually by the Council of Academic Family Medicine (CAFM) Educational Research Alliance (CERA).15 The CERA steering committee evaluated questions for consistency with the overall aim of the omnibus survey, readability, and existing evidence of reliability and validity. Pilot testing was done with family medicine educators who were not part of the target population. Questions were modified following pretesting for flow, timing, and readability. The American Academy of Family Physicians Institutional Review Board approved the project in January 2018. Data were collected from January to February 2018.

The survey was sent to all program directors leading family medicine programs accredited by the Accreditation Council for Graduate Medical Education (ACGME). Programs were identified by the Association of Family Medicine Residency Directors (AFMRD). Email invitations to participate included a link to the online survey. Six follow-up emails were sent at weekly intervals to encourage nonrespondents to complete the survey. As part of the standard CERA survey, program directors were asked to identify their residency programs as either university-based, community-based/university-affiliated, community-based/nonaffiliated, or other (eg, military, community health center); and to report the size of their programs by number of residents (less than 19, 19 to 31, or more than 31). In order to stratify programs based on region, US states were grouped into four geographic regions: Northeast (New Hampshire, Massachusetts, Maine, Vermont, Rhode Island, Connecticut, New York, Pennsylvania, and New Jersey), South (Florida, Georgia, South Carolina, North Carolina, Virginia, Washington, DC, West Virginia, Delaware, Maryland, Kentucky, Tennessee, Mississippi, Alabama, Oklahoma, Arkansas, Louisiana, and Texas), Midwest (Wisconsin, Michigan, Ohio, Indiana, Illinois, North Dakota, Minnesota, South Dakota, Iowa, Nebraska, Kansas, and Missouri), and West (Montana, Idaho, Wyoming, Nevada, Utah, Arizona, Colorado, New Mexico, Washington, Oregon, California, Alaska, and Hawaii). Program directors were also asked to report their total years of experience as a program director and time directing the current program.

Specific questions were asked for this project regarding how many interviews each program offered to applicants; how many interviews were completed; the size of the annual interview budget; additional funds spent on recruitment; any payments/reimbursements made for interviewee travel, lodging, and/or meals; and faculty, administrative staff, and resident hours used per completed applicant interview. See the Appendix (https://journals.stfm.org/media/2211/nilsen-appendix-fm2019.pdf) for specific CERA questions used in this study.

Any response that was identified as an outlier by SPSS in stem-and-leaf plots was removed from the data set before analysis. This included responses over $99,000 in "additional money used for recruitment," and any hours over 40 reported per interviewee for residents, faculty, and staff. Thus, the number of responses and response rate varies slightly between questions. This decision was made because we suspected that these were errors or metaphorical numbers, intended to represent a large amount, rather than actual numbers. Also, due to the small number of "other" types of programs (eg, military, community health center), their data were excluded from this study to limit the possibility of their identification.
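The exclusion rule described above can be sketched in a few lines of Python. This is only an illustration: the study identified outliers with SPSS stem-and-leaf plots, whereas the sketch simply applies the two thresholds stated in the text. The field names and sample records are hypothetical, and the sketch assumes that an outlying answer is dropped per question rather than dropping the whole respondent, which would be consistent with the number of responses varying slightly between questions.

```python
# Thresholds stated in the text; field names and data below are hypothetical.
MAX_ADDITIONAL_FUNDS = 99_000   # dollars
MAX_HOURS_PER_INTERVIEWEE = 40  # hours

HOUR_FIELDS = ("resident_hours", "faculty_hours", "staff_hours")


def drop_outliers(response):
    """Return a copy of one survey response with outlying answers set to None.

    Answers are blanked per question, so the respondent still contributes
    to the questions that were answered plausibly.
    """
    cleaned = dict(response)
    funds = cleaned.get("additional_funds")
    if funds is not None and funds > MAX_ADDITIONAL_FUNDS:
        cleaned["additional_funds"] = None
    for field in HOUR_FIELDS:
        hours = cleaned.get(field)
        if hours is not None and hours > MAX_HOURS_PER_INTERVIEWEE:
            cleaned[field] = None
    return cleaned


# Made-up responses for illustration only.
responses = [
    {"additional_funds": 8_000, "resident_hours": 6, "faculty_hours": 5, "staff_hours": 4},
    {"additional_funds": 150_000, "resident_hours": 100, "faculty_hours": 6, "staff_hours": 3},
]
cleaned_responses = [drop_outliers(r) for r in responses]
```

In this sketch the second made-up response loses its funds and resident-hours answers but keeps its faculty and staff estimates.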

Descriptive analyses (means, standard deviations, confidence intervals, and percentages) were used to describe respondents by key variables such as residency program region, program size, and program type, and also to describe the average time and financial costs of interviewing. Chi-square analyses were used to determine the frequency and percentage of responses based on the key variables, and to determine whether or not there was a statistically significant difference between them. Independent t-tests were used to determine if there were statistically significant differences between groups when compared to one another. Pearson correlations were also used to determine if program director experience, as defined by total number of years in the position, was related to the overall program budget or expenditures. All data analyses were performed using SPSS version 24.0 (IBM, Armonk, NY) and Microsoft Excel.

Results

Population and Demographics

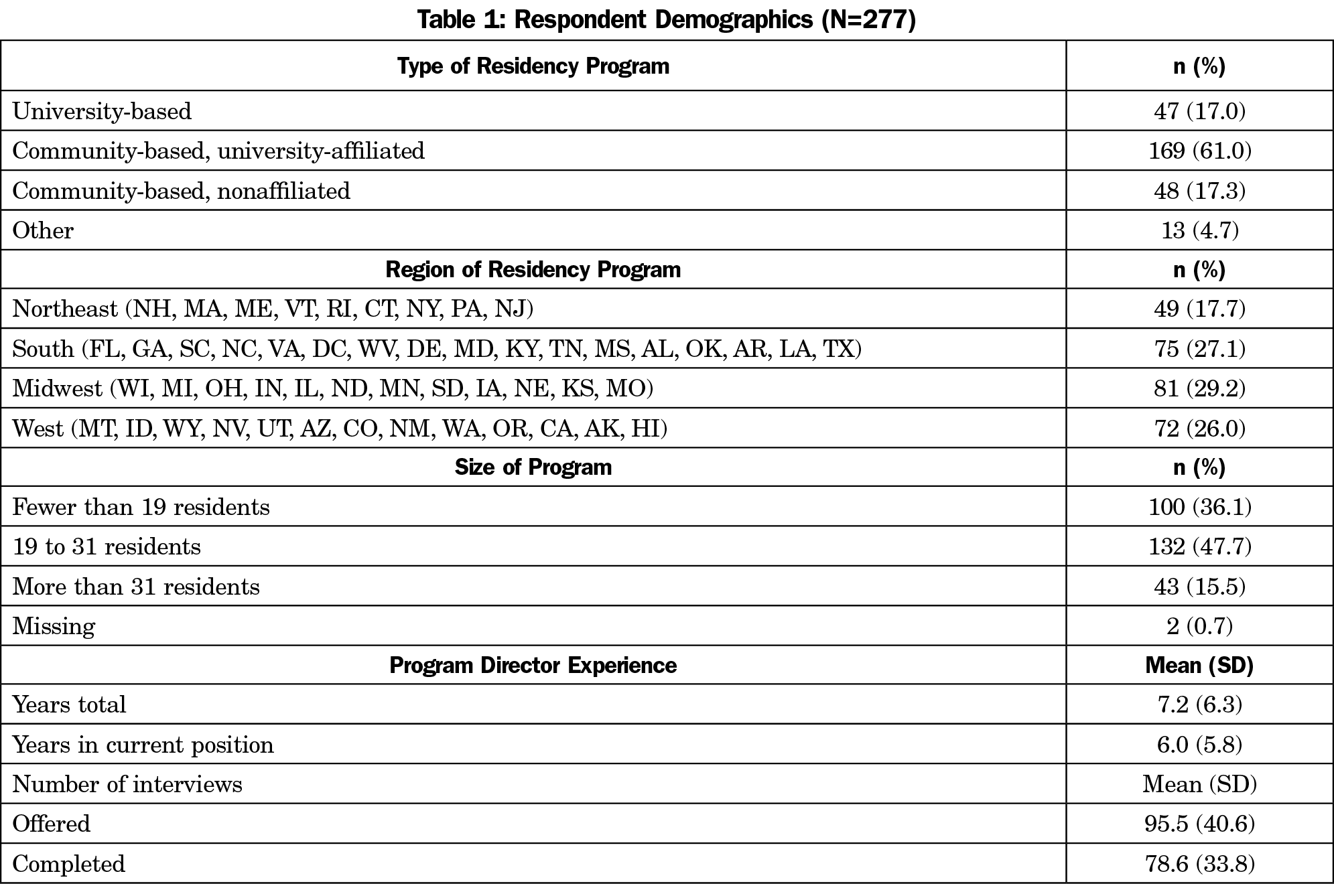

Of the 549 program directors identified by AFMRD, 13 had previously opted out of CERA surveys, and 14 emails could not be delivered, resulting in a sample size of 522. The final response rate for our survey questions was 53% (277/522). Respondents reported a range of less than 2 months to 33 years' experience as a program director, with a mode of 2 years and a mean of 7.2 (±6.3) years (Table 1).
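The sample size and response rate follow directly from the counts reported above. A minimal check in Python (the variable names are illustrative only):

```python
# Counts reported in the text.
identified = 549      # program directors identified by AFMRD
opted_out = 13        # previously opted out of CERA surveys
undeliverable = 14    # emails that could not be delivered
respondents = 277

sample_size = identified - opted_out - undeliverable
response_rate = respondents / sample_size

print(f"sample size: {sample_size}, response rate: {response_rate:.0%}")
```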

The programs surveyed were generally representative of programs nationwide, but community-based/university-affiliated programs were underrepresented in the sample (61% vs 76.5% nationally; χ²[1, n=628]=14.8, P=.0001). University-based programs were overrepresented (17% vs 10%), but this was not statistically significant. All regions were represented, with the largest proportion of responses coming from the Midwest (29%).

Overview of Resource Expenditures

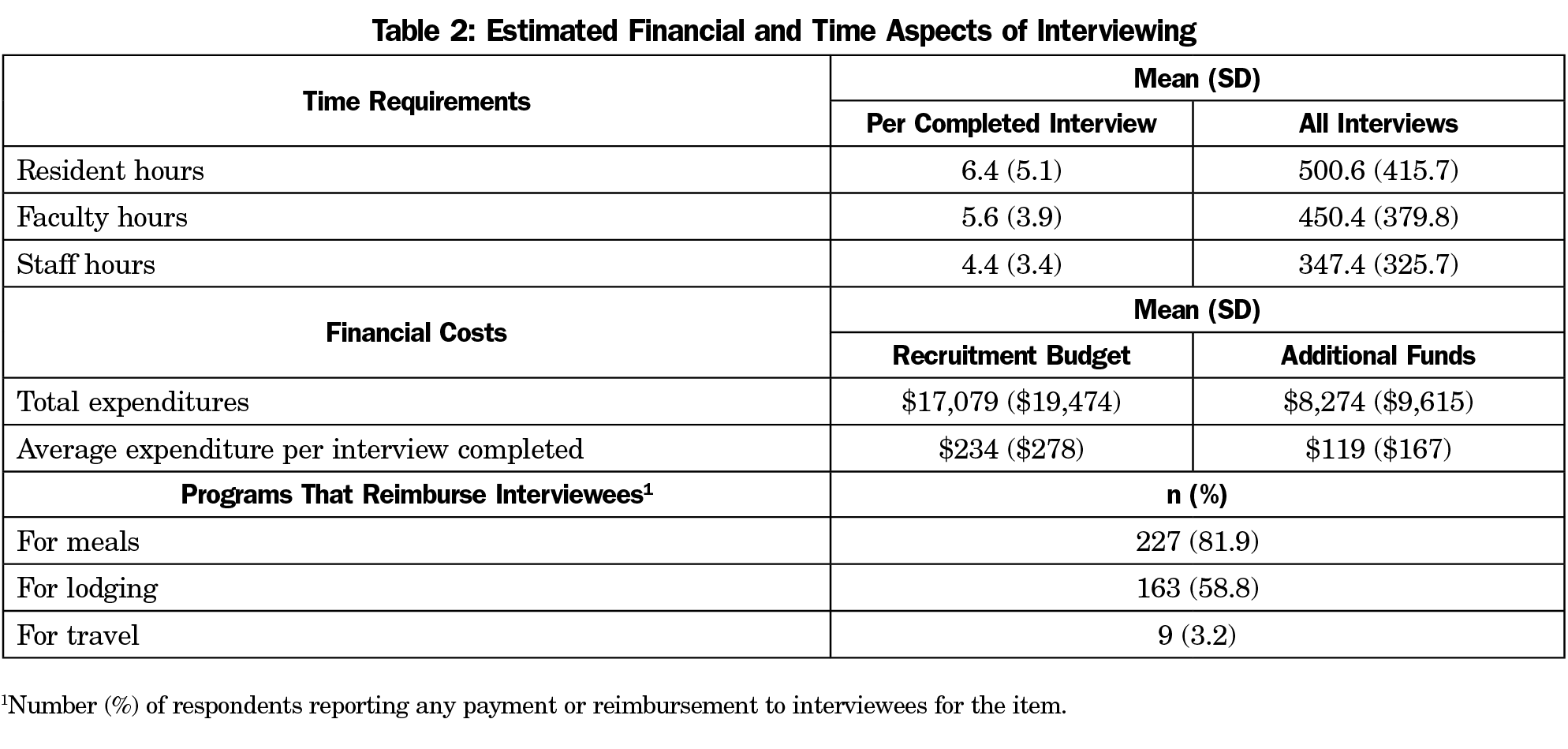

Time Commitment. In order to calculate the overall time spent during the interview process, the number of hours each program director estimated per individual interviewee for residents, faculty, and staff was multiplied by the number of completed interviews. Respondents reported offering between 2 and 300 interviews (96±41) and completing between 2 and 250 interviews (77±34). On average, program directors estimated that residents devoted 6.4 (95% CI 5.9, 7.1) hours to each interviewee, and 501 (95% CI 452, 550) hours to all interviewees combined. Faculty members were estimated to spend an average of 5.6 hours per interviewee (95% CI 5.2, 6.1), for a total of 450 (95% CI 405, 495) hours for all interviews; and staff an average of 4.4 hours per interviewee (95% CI 4, 5), for a total of 347 (95% CI 313, 390) hours overall (Table 2).
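The calculation described above multiplies each program's per-interviewee hours by its own number of completed interviews and then summarizes across programs. A minimal Python sketch, using made-up program records, shows why such totals need not equal the product of the overall averages (for example, 6.4 hours × 77 interviews is about 493, not the 501 resident hours reported). The records and names are hypothetical, and the study's actual computation was done in SPSS and Excel.

```python
from statistics import mean

# Made-up records: (resident hours per interviewee, completed interviews).
programs = [
    (4.0, 40),
    (6.0, 80),
    (9.0, 110),
]


def total_hours(hours_per_interviewee, completed_interviews):
    """Total resident hours one program spends on all completed interviews."""
    return hours_per_interviewee * completed_interviews


# Mean of the per-program totals (the approach described in the text).
mean_of_totals = mean(total_hours(h, n) for h, n in programs)

# Product of the two overall means (a shortcut that can give a different answer).
product_of_means = mean(h for h, _ in programs) * mean(n for _, n in programs)

print(f"mean of per-program totals: {mean_of_totals:.1f}")
print(f"product of overall means:   {product_of_means:.1f}")
```

The two figures diverge whenever hours per interviewee and interview volume are correlated across programs, as they are in this made-up data.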

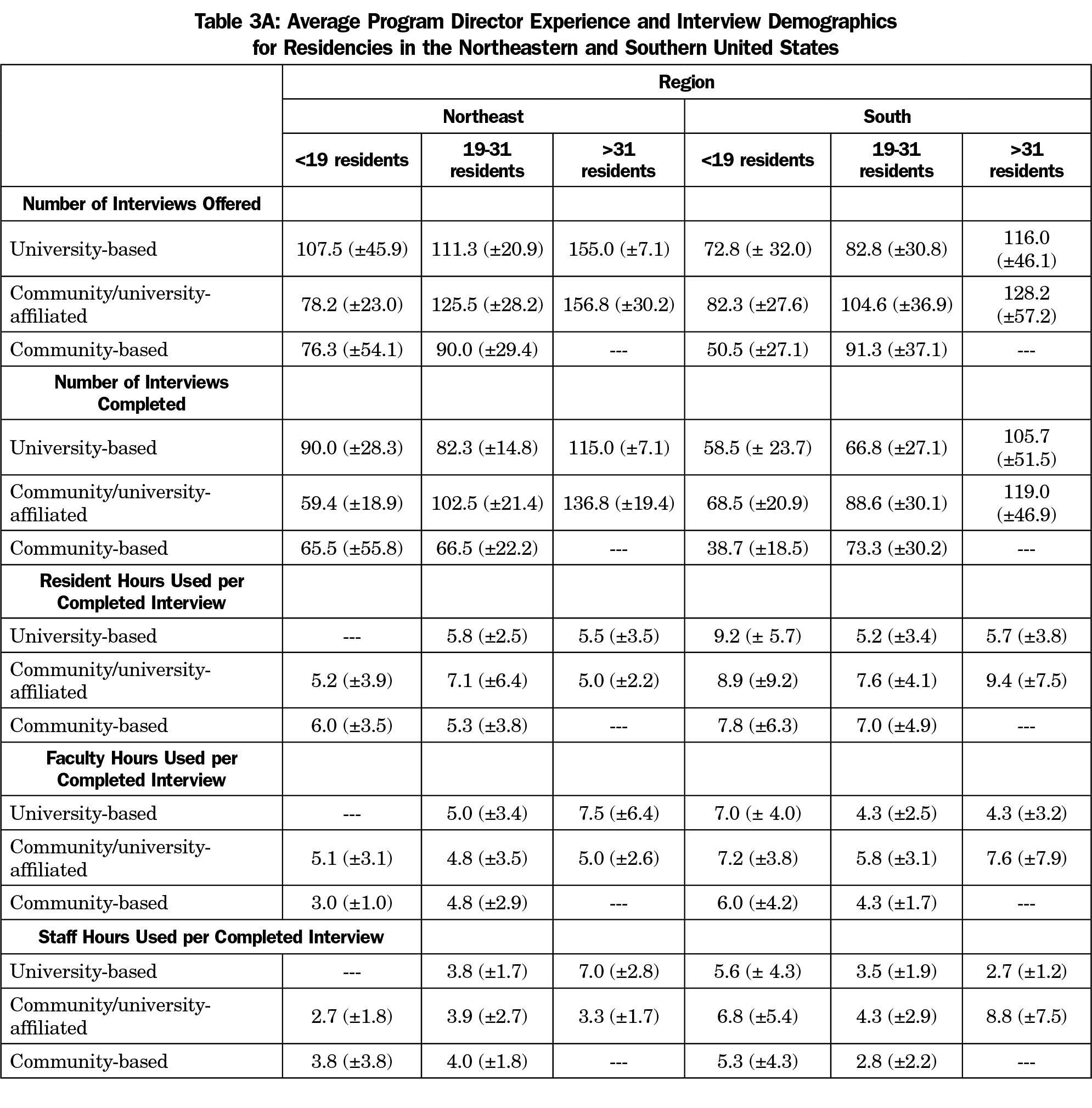

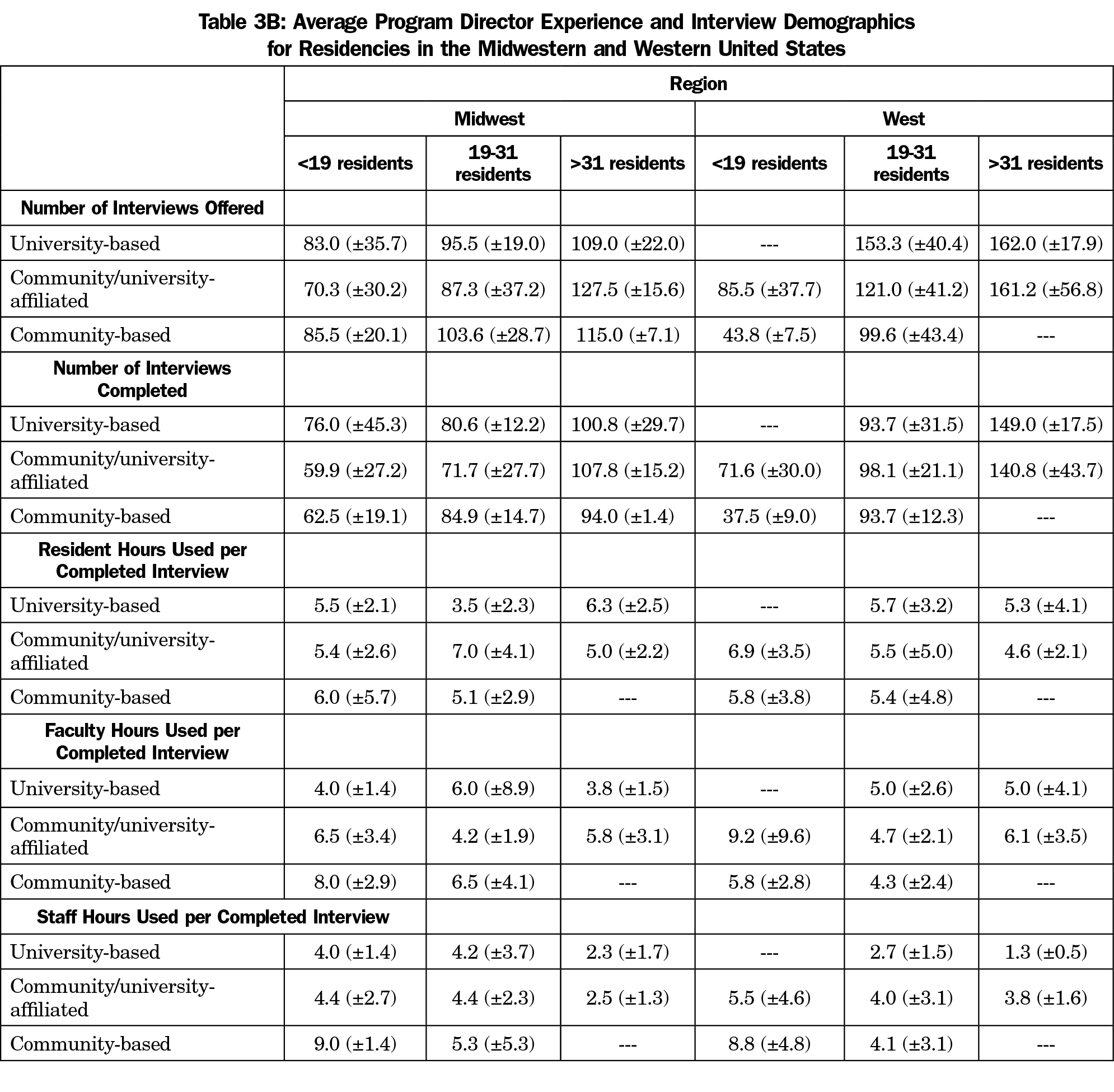

There were no statistically significant differences in the amount of time spent with interviewees based on geographic region. The lowest estimate for residents (4.6±2.1 hours) was given by large community/university-affiliated programs in the western region and the highest (9.4±7.5 hours) by similar programs in the southern region. For faculty time, the lowest estimates (4.0±1.4 hours) were from university-based programs with fewer than 19 residents in the Midwest and the highest (9.2±9.6 hours) from community/university-affiliated programs with fewer than 19 residents in the western region. Estimates of staff time ranged from a low of 1.3 hours (±0.5) by large university-based programs in the western region to a high of 9.0 hours (±1.4) reported by small community-based programs in the Midwest. This difference was statistically significant (t[5]=7.7, P=.0001). Tables 3A and 3B show data by program type, region, and size.

Budgets and Expenditures. The average amount spent per applicant regardless of interview status was $213 (±$360), with $111 (±$237) in additional funds used for recruitment. For each completed interview, $234 (±$278) was spent, with $119 (±$167) in additional funds used for recruitment (Table 2). The largest interviewing budgets ($68,750±$68,144) were reported by large community/university-affiliated programs in the Northeast; these programs also reported the most additional expenditures devoted to recruitment ($27,250±$23,824). The smallest interview budgets ($4,025±$2,086) and additional expenditures ($1,000±$707) were reported by small university-based programs in the Northeast region. However, due to the differences in the sizes of the programs, the difference in expenditures between the largest ($96,000±$91,898) and smallest ($5,025±$2,793) interview budgets was not statistically significant (t[4]=-1.3, P=.26).

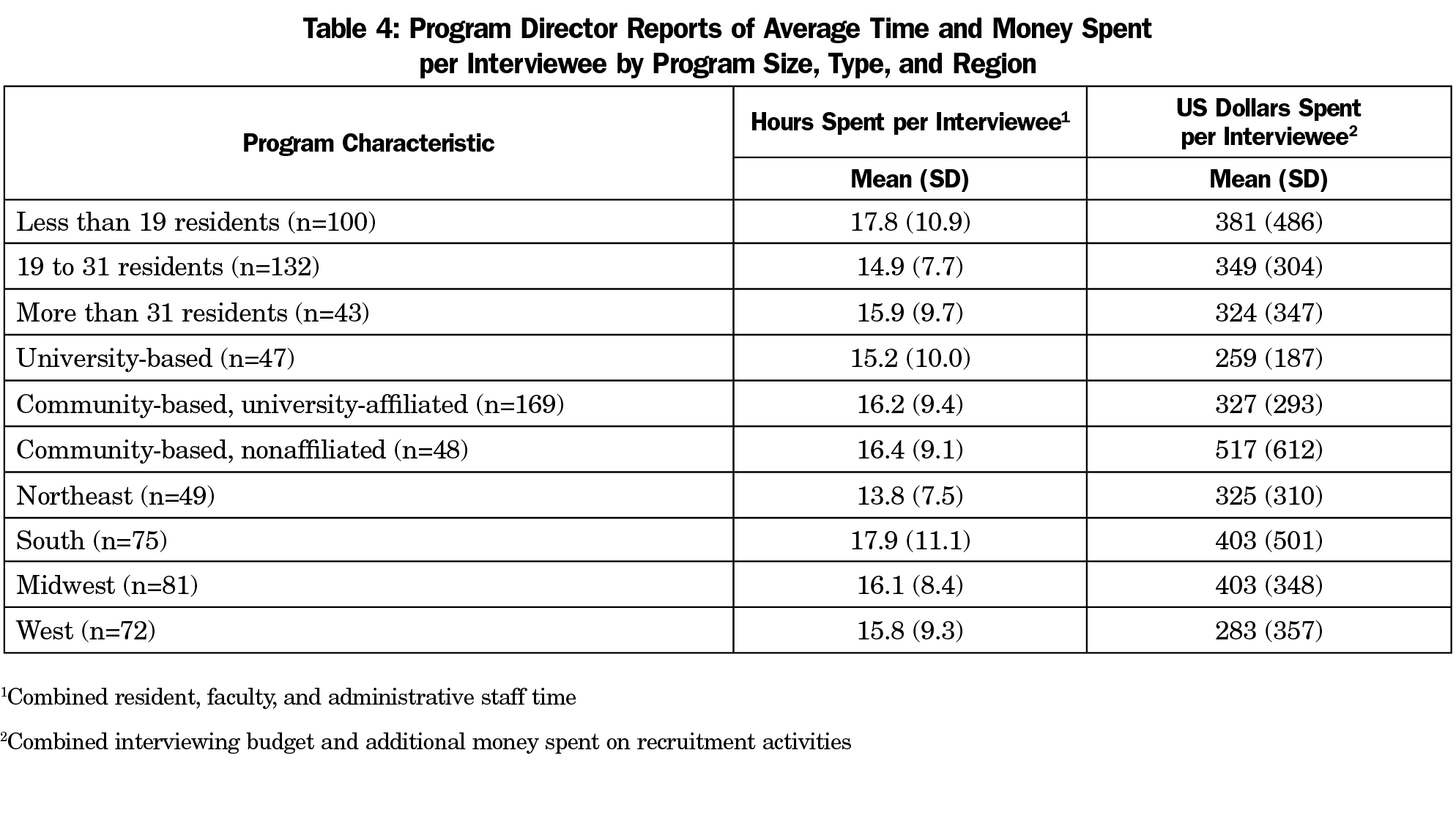

When combining the total residency interviewing budgets and additional recruitment money spent, the only significant differences were seen between university-based and community-based/nonaffiliated programs (t[93]=2.77, P=.007), community-based/university-affiliated and community-based/nonaffiliated programs (t[215]=3.01, P=.033), and between programs in the Midwest and the West (t[151]=-2.1, P=.04; Table 4). Community-based/nonaffiliated programs spent more than either university-based or university-affiliated programs. Programs in the West spent less than those in the Midwest.

Using partial correlation to control for program size, no significant correlations were found between the number of years of program director experience and the total interviewing budget (r=.07, P=.27, n=271), or the additional money spent on recruitment (r=.06, P=.34, n=271).

Payment for Lodging, Meals, or Travel. Programs were more likely to pay for interviewee meals (81.9%; χ²[1]=353.1, P<.0001, 95% CI 73.3 to 83.3) and lodging (58.8%; χ²[1]=202.7, P<.0001, 95% CI 49.5 to 61.8) than travel (3.2%; Table 2). Using partial correlation to control for program size, no significant correlations were found between the number of years of program director experience and whether programs reimbursed applicants for travel (r=.07, P=.25, n=268), meals (r=-.10, P=.11, n=268), or lodging (r=-.11, P=.08, n=268).

Discussion

These data demonstrate the magnitude of program resources consumed by recruitment and interviewing for residents, with an average of 6.4 hours of resident time, 5.6 hours of faculty time, and 4.4 hours of administrative staff time, and an average of $234 per completed residency interview. Additionally, the majority of family medicine residency programs (81.9%) involved in this study paid for applicant meals during the interviewing process, and more than half paid for lodging (58.8%). However, these data do not include additional substantial costs to individuals and programs. Faculty and resident time for recruitment and interviewing must compete with patient care, teaching, research, and other responsibilities. This time burden is likely unevenly distributed, as programs are likely to rely more heavily on specific individuals whose personalities and interpersonal skills are suited to the interviewing process. Any adverse effects of recruiting and interviewing activities on the education of such residents have not been studied. Similarly, lost productivity of faculty in teaching, clinical service, administration, and research due to recruiting has not been quantified and may be significantly higher for some individuals. Among staff, program coordinators are often the first line in application screening, and are the primary contact for applicants for information, interview scheduling, and overall coordination. Hospital systems often evaluate ACGME Program Requirements for coordinator requirements without considering the essential role of the coordinator in the overall recruitment effort.

Also, the similar internal medicine study by Brummond and colleagues12 showed an average expense of $1,042 (range: $733-$1,565) per completed interview, but this is because the Brummond data account for the actual monetary cost of the time that faculty, residents, and staff spent on interviews, whereas our study did not. If the cost of the resident, faculty, and administrative staff time were included, the actual cost of recruitment and interviewing for family medicine residencies would be much higher. Overall, interviewing budgets (average of $17,079), combined with additional funds used for recruitment (average of $8,274), do not convey the enormous time investment for residents, faculty, and staff.
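To illustrate how much the picture changes once personnel time is priced in, the sketch below adds a time cost to this study's $234 per completed interview. The hourly rates are placeholder assumptions chosen purely for illustration; they come neither from this study nor from Brummond and colleagues, and real figures would vary by institution.

```python
# Reported in this study (per completed interview).
DIRECT_COST = 234.0  # dollars
HOURS = {"resident": 6.4, "faculty": 5.6, "staff": 4.4}

# ASSUMPTION: placeholder hourly rates in dollars, for illustration only.
HYPOTHETICAL_HOURLY_RATE = {"resident": 30.0, "faculty": 100.0, "staff": 25.0}


def cost_per_interview(direct_cost, hours, hourly_rate):
    """Direct spending plus the priced value of personnel time."""
    time_cost = sum(hours[role] * hourly_rate[role] for role in hours)
    return direct_cost + time_cost


total = cost_per_interview(DIRECT_COST, HOURS, HYPOTHETICAL_HOURLY_RATE)
print(f"estimated cost per completed interview: ${total:,.0f}")
```

Under these placeholder rates the time component is several times larger than the direct spending, which is the point the paragraph above makes qualitatively. Substituting local salary data for the assumed rates would give a program-specific estimate.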

Our findings show differences in expenditures and time commitment for resident recruitment based on program region, type, and size. The number of hours spent on resident recruitment by residents, faculty, and staff in the South region was much greater in programs with fewer than 19 residents than in those with 19 residents or more. Similarly, the number of hours of faculty and staff time spent on resident recruitment in the Midwest and West regions was much greater in programs with fewer than 19 residents than in those with 19 residents or more. Smaller programs may be spending more time on recruitment activities if only one or two people are assigned to review applications and interview applicants, as opposed to large programs, which may be able to spread the workload among more people. Programs also varied considerably in payment for interviewee lodging. Frequent payment for travel was only reported for university-based programs in the Northeast region. Program director experience does not appear to be significantly related to the volume or types of resource use reported.

Generalization of our findings is limited by the response rate and underrepresentation of community-based/university-affiliated programs in our sample. In addition, data are self-reported and no literature or external information is available to validate program directors' reports of budgets and/or time spent. These data also only cover the program resources consumed by interviewing, which is only a portion of the resources required for recruitment. There is also significant time spent reviewing applications for students who are not interviewed, as well as the time spent on residency fairs, local student events, and second-look experiences. The study is also limited by the inability to correlate the data to the number of PGY-1 positions recruited for in each program.

However, the information generated by this national survey should be useful to individual programs and sponsoring organizations by providing comparisons among programs with similar characteristics. A prior study with fourth-year medical students applying to multiple specialties discovered that at least some students apply to all the residency programs they can, and then sort them out later, even using some interviews as practice for "more important" interviews!16 This seems like a huge drain on resources that could be used in other ways. The results of this study, and others like it, may contribute to national discussions concerning development of best practices in resident recruitment and ways to improve the efficiency of the process. These discussions may help reform the process of residency interviewing by determining the scope of the time and cost commitment by both programs and applicants, and finding ways to take the burden off of both groups by limiting the number of residencies to which an applicant can apply.

Medical students would be required to do their due diligence prior to applying to multiple residencies, and then only apply to programs they actually want to attend. This would lower the number of applications submitted, decrease the number of applicants that would need to be screened and offered an interview, and ultimately decrease the number of interviews completed. Certainly, freeing up more time for clinical duties for both residents and faculty would positively impact the residencies financially, as well as freeing up more time for residency education.

Despite the caveats above, this study provides the first national description of recruitment expenses and time commitments. It must be stressed that this is purely a big-picture descriptive study of current practices as reported by survey participants. The results should not be interpreted as optimal expenditures for resident recruitment or established as goals for individual programs. Deciding the optimal investment in resident recruitment for an individual program is a complex and challenging process, driven by local as well as national considerations. Even within a single geographic region, programs of similar size and types may require very different budgets and resources for optimal recruitment. Much more research is needed on this issue, both at the national and local levels. Studies are also needed to compare recruitment costs and practices among specialties in order to develop best practices for all programs nationwide.

Acknowledgments

The authors thank the faculty and staff who support the CERA program, as well as Dr Philip Dooley for his feedback.

References

- National Resident Matching Program. Results and Data: 2018 Main Residency Match. Washington, DC: National Resident Matching Program; 2018.

- National Resident Matching Program, Data Release and Research Committee. Results of the 2018 NRMP Program Director Survey. Washington, DC: National Resident Matching Program; 2018.

- Gliatto P, Karani R. The residency application process: working well, needs fixing, or broken beyond repair? J Grad Med Educ. 2016;8(3):307-310. https://doi.org/10.4300/JGME-D-16-00230.1

- Aagaard EM, Abaza M. The residency application process‑burden and consequences. N Engl J Med. 2016;374(4):303-305. https://doi.org/10.1056/NEJMp1510394

- Claiborne JR, Crantford JC, Swett KR, David LR. The plastic surgery match: predicting success and improving the process. Ann Plast Surg. 2013;70(6):698-703. https://doi.org/10.1097/SAP.0b013e31828587d3

- Chang CW, Erhardt BF. Rising residency applications: how high will it go? Otolaryngol Head Neck Surg. 2015;153(5):702-705. https://doi.org/10.1177/0194599815597216

- Weissbart SJ, Kim SJ, Feinn RS, Stock JA. Relationship between the number of residency applications and the yearly match rate: time to start thinking about an application limit? J Grad Med Educ. 2015;7(1):81-85. https://doi.org/10.4300/JGME-D-14-00270.1

- Naclerio RM, Pinto JM, Baroody FM. Drowning in applications for residency training: a program's perspective and simple solutions. JAMA Otolaryngol Head Neck Surg. 2014;140(8):695-696. https://doi.org/10.1001/jamaoto.2014.1127

- Malafa MM, Nagarkar PA, Janis JE. Insights from the San Francisco Match rank list data: how many interviews does it take to match? Ann Plast Surg. 2014;72(5):584-588. https://doi.org/10.1097/SAP.0000000000000185

- Baroody FM, Pinto JM, Naclerio RM. Otolaryngology (urban) legend: the more programs to which you apply, the better the chances of matching. Arch Otolaryngol Head Neck Surg. 2008;134(10):1038-1039. https://doi.org/10.1001/archotol.134.10.1038

- Katsufrakis PJ, Uhler TA, Jones LD. The residency application process: pursuing improved outcomes through better understanding of the issues. Acad Med. 2016;91(11):1483-1487. https://doi.org/10.1097/ACM.0000000000001411

- Brummond A, Sefcik S, Halvorsen AJ, et al. Resident recruitment costs: a national survey of internal medicine program directors. Am J Med. 2013;126(7):646-653. https://doi.org/10.1016/j.amjmed.2013.03.018

- Callaway P, Melhado T, Walling A, Groskurth J. Financial and time burdens for medical students interviewing for residency. Fam Med. 2017;49(2):137-140.

- Walling A, Nilsen K, Callaway P, et al. Student expenses in residency interviewing. Kans J Med. 2017;10(3):1-15.

- Seehusen DA, Mainous AG III, Chessman AW. Creating a centralized infrastructure to facilitate medical education research. Ann Fam Med. 2018;16(3):257-260. https://doi.org/10.1370/afm.2228

- Nilsen KM, Walling A, Callaway P, Unruh G, Scripter C, Meyer M, Grothusen J, King S. "The End Game" - Student perspectives on the MATCH: a focus group study. Med Sci Educ. 2018;28(4):729-737. https://doi.org/10.1007/s40670-018-0627-1

Source: https://journals.stfm.org/familymedicine/2019/may/nilsen-2018-0362/#:~:text=Time%20Commitment.&text=Respondents%20reported%20offering%20between%20two,hours%20to%20all%20interviewees%20combined.
